Guests

Deborah Caldwell-Stone

American Library Association

Ciara Eastell

Libraries Unlimited

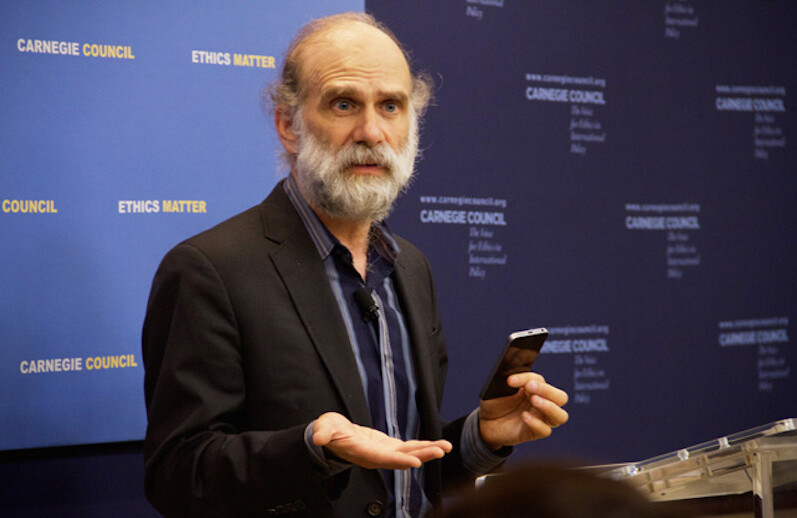

Bruce Schneier

Berkman Klein Center for Internet & Society, Harvard Kennedy School; IBM Resilient

Hosted By

Albert Tucker

Carnegie UK Trust

Joel H. Rosenthal

President, Carnegie Council for Ethics in International Affairs


This seminar, sponsored by the Carnegie UK Trust as part of a study tour on the future of public libraries, explores privacy and the role that libraries can play in this arena. Keynote speaker Bruce Schneier paints a bleak picture of the erosion of privacy, since we are all constantly creating a data trail. Yet he declares that none of this is irreversible. It's a question of changing our laws, policies, and norms, and libraries can help.

JOEL ROSENTHAL: I want to begin by welcoming our colleagues from the Carnegie UK Trust. This event is part of a study tour organized by the Trust inquiring into the future of public libraries, and as a subset of that question, the issue of privacy in the digital age. We began the discussion yesterday at the offices of the Carnegie Corporation and at the New York Public Library, and we're delighted for the opportunity to expand it in a broader discussion with all of you today.

One of the advantages of being a Carnegie organization is that we benefit from the ideas, inspirations, and good works of our sister institutions. The Carnegie UK Trust is an extraordinary organization based in Dunfermline, Scotland, the birthplace of Andrew Carnegie. The Trust's programs influence policy and deliver innovative ideas to improve well-being across the United Kingdom and Ireland.

I particularly admire the Trust's creative effort in community engagement at the local and municipal levels. Their motto captures perfectly the Carnegie ethos: "Changing minds, changing lives." Their work is fact-based, inclusive, open, and pragmatic, and this, I think, is the Carnegie signature.

This meeting gives me the opportunity to publicly thank the leadership of the Trust, and although they are not present today, outgoing Chairman Angus Hogg and Executive Director Martyn Evans have demonstrated admirable stewardship of the Carnegie legacy. Trustee Albert Tucker, who is here, and who will speak in a moment, is representative of this impressive leadership team.

I would also like to recognize Anna Grant, the head liaison of the study tour, who has done an excellent job in organizing the program. Thank you, Anna.

Four Carnegie Council trustees are here today, and I'd like to recognize them as well: Rich Edlin, Jonathan Gage, David Hunt, and Bob Perlman. Andrew Carnegie believed deeply in the leadership capacity of trustees who would be true to the founding principles of the organization and yet always ready to adapt to new challenges. I think he would be pleased to see all of you here today working in this vein more than a hundred years after our founding.

I have been asked to say a few words of introduction to set the frame for our discussion. The real experts will follow me, but I want to offer just three observations very briefly to get us started.

First, a word about the moment that we are in now. We all know that Apple can track our movements through our mobile devices; this includes how many steps you take per day, where you go, and how many flights of stairs you climb. Facebook can record our interests in news, hobbies, travel, and consumer purchases. Google can track our Internet searches on every subject, professional and personal. Amazon can sell you a voice-recognition personal assistant called Echo that listens to every conversation and responds to your commands and requests. Amazon can also sell you the Nest home monitoring device, tracking the temperature in your home, your electricity usage, and the security video of who comes to your door. Music vendors like Pandora catalog the music that you listen to. National governments compile databases on taxes, communications, and security threats. Local governments and their agencies, such as libraries, know which books you read. Most organizations can tell whether or not you open the e-mails and electronic newsletters they send you. They can see which stories you click on and how long you spend reading each one. All of this information is collected and stored digitally.

In his book, Free Speech: Ten Principles for a Connected World, Timothy Garton Ash sums it up perfectly in one phrase. He says, "We are all tagged pigeons now." Several other writers invoke another image to make the same point; they mention Jeremy Bentham's Panopticon. The Panopticon was Bentham's design for a prison where all the inmates could be watched by a single watchman without knowing if they were being watched or not. The idea of an all-seeing, omnipresent eye is chilling at a basic level.

This leads to my second observation, that is, how should we think about control of the information that is known about us? Again, to quote Timothy Garton Ash, who spoke here not so long ago—and some of you may have been present for that—he says, "A basic principle must be that my data remains mine, not yours to data-mine. Citizens should have what the Germans call 'informational self-determination.'" An advocacy group called Privacy International puts it this way: "Privacy is the right to control who knows what about you and under what conditions."

As we have seen in the arguments over the so-called "right to be forgotten," that is, the right to be "off the grid," so to speak, there are deep-seated cultural differences over what this right entails. Debates are ongoing as to what exactly constitutes the conditions under which a right to know outweighs the right to privacy.

Finally, it is important to remember that we are not without moral or legal compass when it comes to the idea of privacy as a right and as a condition of well-being. For all of the novelty of our current situation, we should probably be skeptical of the idea that it is unprecedented.

For example, Timothy Garton Ash reminds us that in 1890 Samuel Warren and Louis Brandeis published a law review article titled "The Right to Privacy." It laid out principles that remain relevant to this day, including the idea that there should be protection against intrusion into private affairs and against public disclosure of embarrassing facts.

How we develop and adapt ethical principles around privacy in the Internet age is an open question. The study group with us today, with its focus on libraries, may have a special contribution to make, and we believe that it does. Libraries have been important norm setters for community engagement. We believe they will continue to play this norm-setting role well into the future.

Privacy is a fundamental human value. The stakes are high, and the conversation is important. This is a big agenda, I know, but if not us, then who? Fortunately we have great speakers here today to lead the way, and I'm going to turn it over to them.

I want to thank you all for coming, and now I will turn it over to my colleague, Albert Tucker.

Thank you very much.

ALBERT TUCKER: Thank you, Joel. Good afternoon, and thank you so much for welcoming us here in New York in this wonderful space, and thanks to our colleagues, Joel and the Carnegie Council, for hosting us. We've been taking in this wonderful city of yours—of ours, I have to say, we've claimed ownership as well—looking and walking around the city, visiting the museums, and discussing this issue.

At the Carnegie UK Trust, which is one of the family of Carnegie organizations, we very much work toward an evidence-based approach to change. We try to understand impartially and without prejudice what the situation is, try to empower citizens, try to get people to really think about the issues, but also to try to understand feelings. Weirdly, in the policy world people don't talk about feelings too much; how are people feeling about things, and will that help us change. We also put a great deal of store in learning from others, the people who think about these things, the people who work with them, the people who are activists in these areas that we are interested in changing and empowering citizens in. So this is a part of that process.

There are 12 of us from across the pond here and from across the United Kingdom, representing different aspects of the United Kingdom, different aspects of the world, different skills in this area. But also not just in this area—we have a much broader frame, in fact. Probably the person who least knows about how libraries function is myself, but actually the task at hand is critical going forward, I think.

I will just quickly say who we are. The fact that you would all come and share a room with a gang of British people coming to talk about secrecy and privacy and data protection is a testament to your interest in the issue as well.

Just so you know who the people in the room are: I think these are some of the leading thinkers in this area, and we hope that by understanding this better we can achieve a much broader reach through this group of people, who have the influence, ability, and energy to actually make a difference. Together, and with what we learn from people like yourselves, we can hopefully take this forward.

We have people who have responsibilities for library networks, people who advise government and institutions on policy; we have key members of the Carnegie team who are going to take this forward; we have people who are also working on consumers and how they engage with libraries in the digital world; we have people who are going to influence all kinds of IT and digital technology, people who run community-based libraries independently and try to make that really responsive to consumers, those who are challenged about how libraries really listen to the people who use them and what future role they can play. So we cover a wide range.

But just quickly to say who we are here, and just so I don't miss anybody, we've got Martyn Wade—if you could just stand up and give a little wave so people know who you are. I won't tell you too much, just catch up with people when you want to.

We've got Trisha Ward who is here with us. We've got Niall over there; Catherine Stihler, who is an MEP, a Member of the European Parliament, and really has been a supporter of the Trust and in our work with libraries; Ryan Meade; Ciara, who you are going to hear from later; Aude Charillon; Brian Ashley; and Will Perrin.

We really have been trying to look and understand the issues as a team here. We also have the two people who keep us in order and make sure we actually meet the brief and not enjoy New York too much. We've got Douglas White from the Trust, and Anna, who has been really organizing everything for us.

So thank you very much for having us here.

The format, Joel informs me, is slightly different than the usual format here, so maybe I should explain that a little. Ciara is going to tell us a little bit about the experience in the United Kingdom, just to give us a sense of the points of difference later when we reflect. Then I think we are very privileged to have Bruce Schneier here, who is going to talk to us from his experience of this, and I want to hear what that is going to be about and which directions he is going to take us in.

Deborah is here with us and will be responding to what we hear. Then we will have questions and answers, in which we would really like you to look at the ethics of this issue, the differences between our countries, and, if we understand those clearly, what some of the challenges are, some pointers for the future, and food for thought. I'm a keen one for food for thought. Are there things we should be thinking about going forward from your experiences and your areas of expertise? That will be most helpful.

As I've said, for us this is really important, the exchange of information, the sharing and meeting you all, particularly at a friend's house. That's a great one.

Without any further ado, I'll ask Ciara to come and tell us a little bit from her perspective on the issue.

CIARA EASTELL: I'm Ciara Eastell. Hello, everybody.

What I'm hoping to do in just the next five-to-ten minutes is give you a very quick overview of the position for libraries in the United Kingdom. Given the time constraints, I'm not aiming to be completely comprehensive, so I trust that you'll trust me to give you the highlights. To my colleagues from the United Kingdom: any errors or omissions are my own.

What I'm hoping to do is to give you a flavor of the kind of multidimensional position in the United Kingdom in terms of libraries and this issue, because it is not simple or straightforward. So I'm going to talk through the different stakeholders in this space.

First of all, I want to start with the perspective of libraries' most important asset: our staff. They are delivering services directly to the public across the United Kingdom in over 4,000 public libraries, and it is our staff really that is helping people within their communities navigate these issues around data and privacy on a very regular basis, day in, day out.

Through our network of free public Internet provision, free Wi-Fi, and fixed PCs in libraries, the public library has become the place of first resort and last resort for citizens who need access to online services. Where once people would have gone to the jobs center on the High Street to look for work, they now come to the library to complete the 35 hours a week of online job searching that they are required to do. If they cannot demonstrate that they are doing 35 hours a week of online searching, they risk losing their benefits. Where do they go? The library.

Where once people would have gone to the housing office in their local community to look for council or social housing, they now come to the library to browse available properties, of which there are often not very many, and bid for the best ones available.

The cutbacks that we've seen across all public services in the United Kingdom over the past five years mean that increasingly libraries are the only civic space within communities for those seeking information and support. Against that backdrop, our staff is performing an incredibly important but often invisible job in helping those people to access government services.

I think in doing this they are carefully navigating issues around privacy and data. They are often serving very vulnerable people who lack literacy skills, sometimes with mental health issues, who perhaps unsurprisingly often need fairly significant support in terms of setting up e-mail addresses, creating passwords, and generally engaging in the online civic space, where sometimes usability and accessibility of online services is not as good as it should be.

I am fairly confident from my own experience within my own team in Devon that our staff working across libraries in the United Kingdom are very conscious of these privacy issues, though I say that without any solid evidence. We don't specifically train staff to address these issues in any detail, and as far as I'm aware there are few, if any, privacy policies in existence in UK public libraries.

Turning now to what is happening at a library leadership level in the United Kingdom, the Society of Chief Librarians, otherwise known as SCL—catchy title—we are the body that represents heads of library services in England, Wales, and Northern Ireland. We have been working at a national level, particularly within England, since 2013 to develop a series of universal offers around the five key areas of public library provision: reading, learning, information, digital, and health, with a further offer around culture being launched shortly.

We have used the framework of the universal offers to enable us to develop national partnerships that can be levered for benefit at a local level, to advocate the reach and impact of public libraries, to lever more investment into service development, to drive innovation and aspiration, and to train our staff in the skills of a 21st century library service. Specifically in relation to the issue that we are talking about today, our universal information offer has been particularly successful in developing a clear sense of what the key issues are for supporting people to gain access to online services. In 2014 over 80 percent of England's public library workforce—thousands of people—participated in online learning to develop their digital skills so they are better able to support library customers.

In 2016, just last year, SCL secured a place on the Government Digital Services Framework for Assisted Digital and Digital Inclusion Services—very snappy title, I think you'll agree. That means that as individual government departments move their services online they should use the framework to tender for support so that barriers around disability or literacy should not prevent people being able to access those services.

At SCL we worked very hard to sign up the 151 different library services across England to this framework. That had never been done before, but the carrot for all library services was that, for the first time, they might get paid for what they had previously been doing for free.

Just as an example of that, the library service on the Isle of Wight—a very small library service—has recently undertaken a pilot as part of the framework to test out new approaches to the census. As the next census in 2021 will be done entirely online, which I believe is the same here, could this be a real opportunity for libraries to be center stage for the work around the census in a few years' time?

Our universal learning offer has focused on developing the skills to help our staff shape a much more engaging, lively, dynamic digital offering. We have developed a resource called Code Green that encourages innovation around hackathons, Raspberry Pis, maker spaces, all sorts of engagement. Within the universal digital offer, SCL has pioneered a digital leadership program supporting existing and up-and-coming leaders with the skills and confidence to lead in the digital space. Issues such as how library leaders should be shaping the marketplace in terms of procurement have been amongst the topics discussed by those digital leaders.

We have established a National Innovation Network so that best practice and ideas around digital services can be shared easily within the network. We've shaped an initiative called Access to Research with academic publishers so that people using our libraries have access to reams and reams of high-quality academic research, which they previously could only access through a university, and they can do it for free in their local library.

So there is much that SCL has progressed at a national level that is relevant to our discussions about data privacy, much that could provide some of the building blocks that could help us take back the conversations from our study trip and make things happen.

But I have to say that the issue of data privacy is not one that ranks highly on the list of library leaders today. Too often the brains of library leaders—people like myself leading a library service, Niall leading a library service—are preoccupied by austerity and the need to cut significant sums of money from the library budget. These discussions can become all-consuming and get in the way of broader and important discussions about the role and impact of libraries within communities.

Other issues—just briefly, to give you a flavor—that I think are pertinent in this space relate to the changing governance structures within UK public libraries. We are seeing increasingly that libraries are being handed over to volunteers and run by volunteers. What guidance and support are those volunteers being given around data privacy? I'm not sure that we know.

In organizations like my own, which are now operating as spin-outs from the local authority in pursuit of a much broader base of income, operating models from the commercial sector, will there be more of an expectation from our boards, from our funders, to look at how we commercialize our own data?

More positively, how can we use our data to shape new services using the techniques of the private sector to provide better, more targeted services for our existing and potential users, an issue that Carnegie picked up recently in its Shining a Light report on UK public libraries?

Finally, there are reasons to be cheerful. We have some emerging good practice. We have Aude Charillon, who is here with us on this trip, who is almost single-handedly showing us some new possibilities. She has hosted CryptoParties in Newcastle libraries and is gradually growing a public debate in her city about the potential of libraries around privacy.

We are seeing a growing interest in open and big data, with services like my own being much more interested in sharing our data sets with others and overlaying them with other data sets, such as those around deprivation. We are doing it with an open mind—we don't know where it is going to take us—seeing what might come, but we are hoping, certainly in Devon, that the act of sharing our data will itself begin to open up new dialogues about the role that libraries play and the social impact that they create in communities.

At a national level, we have CILIP, the Library and Information Association and our professional body, actively shaping this space and collaborating with Carnegie and others to push the agenda forward.

In summary, our experience in the United Kingdom tells us that our communities, particularly those that are most marginalized and potentially disenfranchised, are in need of support from us on this issue. We know too that our workforce are already responding positively to those needs and helping their users navigate sensitive issues around data privacy. We know that they need more practical support in terms of both training and privacy policies to provide a framework for that public engagement.

As library leaders we are confident that we have many of the building blocks in place through the universal offers and our national partnerships to take forward this agenda, and we know that we can tap into the expertise and support of national organizations like our professional body, CILIP, the Carnegie Trust, and our other non-library colleagues on this trip who have much knowledge to share.

I think it's fair to say that this week in New York we are learning huge amounts. I have learnt an awful lot which will help us connect up the conversation back in the United Kingdom, and we look forward to learning more in this afternoon's seminar that will enable us, as library leaders, to take forward libraries' really important role as educators, conveners, data processors, and purchasers in the area of data privacy.

I think the issue strikes at the heart of our professional DNA as librarians. It is what motivates most of us to keep working in libraries, that sense that we are here to ensure the access to information for everybody. I know, speaking for my colleagues, I think, from the United Kingdom, we really look forward to exciting new possibilities in our library policy and practice inspired by this trip.

Thank you.

ALBERT TUCKER: The report that Ciara mentioned, Shining a Light, is a report that the Trust has done looking at the use of libraries. We found in that report that libraries are still very valued in our context, that young people are using libraries more—which I personally didn't think was the case—and also that people are using libraries and wanting to use libraries for more things. We also realized that people didn't know enough, and didn't understand enough about how best to use them. Some of the things people were asking for, some of my colleagues here are already introducing in their environment. But people don't know enough about what is available.

We also are hearing more about the convening power of libraries in our areas from that report. Yesterday we found out at the New York Public Library that libraries are being asked by the city to actually educate the citizens on data protection and privacy. For us that was a really interesting move about the role of libraries as educators, not just as a knowledge base, but as educators and as thought leaders in this whole issue of data protection and privacy.

From that point, I come to Bruce, who my notes tell me is an internationally renowned security guru, so I can't wait. Bruce?

BRUCE SCHNEIER: Thank you. Thanks for having me. Thanks to the UK colleagues for inviting me.

I grew up in New York, so it's always nice to be back. I want to give people who are visiting from the United Kingdom a little advice. Those cookies in the center, the round ones? Those are called black-and-whites. When I was a kid, they were much bigger. The way you eat them is you take the cookie, break it in half, separating the black from the white, eat the chocolate, and then leave the other half for your sister. [Laughter] Always works for me.

Joel talked a bit about the things around us that collect data day to day. I think it is worth talking about what is happening and why.

The fundamental truth is that everything we do that involves a computer creates a transaction record, some data about the transaction, whether that is browsing the Internet, using or even carrying a cellphone, making a purchase either online or in person with a credit card, walking by any Internet of things (IoT) sensors, saying something around an Alexa that is turned on, walking past any of the millions of security cameras in this city, in London, everywhere. All of those things produce a record of what happened.

Data is a byproduct of any socialization we do in the information society: phone calls, e-mails, text messages, Instagram posts, Facebook chatter. This is all data. This data is increasingly stored and increasingly searchable. This is just Moore's Law in operation—data storage gets cheaper, data processing gets cheaper, and things that we used to throw away we now save.

Think of e-mail. Back in the early days of e-mail I would carefully curate my mailboxes. I had hundreds of mailboxes. I figured out what to save, what to throw away. I looked back recently. In 2006 I stopped doing that. Before 2006, hundreds of e-mail boxes; after 2006, I had seven. Because for me, for e-mail in 2006, search became easier than sort.

That threshold has largely been crossed with pretty much everything. It is much easier to save everything than to figure out what to save. The result is that we are leaving this digital exhaust as we go through our lives. This is not a question of malice on anybody's part, this is simply how computers work.

This change has brought with it a lot of other changes. The way we use data has changed. It used to be that we would save data for historical reasons: to remember what we said and what we did, to verify our actions to some third party, to let future generations know what we thought.

Increasingly data now drives future decision-making. That is the promise of big data, that is what machine learning does, and I'll talk about that later.

This data is surveillance data. The phrase you might remember from the early months of the Snowden documents was the term "metadata." Metadata is data about data; it is data the system needs to operate. For a cellphone call, the data is the conversation I'm having; the metadata is my number, the other person's number, the date, the time, our locations, the call duration. For e-mail, the data is the message I'm typing; the metadata is the from, the to, the routing, the timestamp. We can argue about the subject line.
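To make the distinction concrete, here is a minimal Python sketch, not something presented at the seminar, that splits a phone call into its content and the metadata record a network keeps. The class and field names (CallRecord, caller_cell, and so on) and the sample values are hypothetical; the fields simply mirror the ones listed above: both numbers, the date and time, the locations, and the call duration.

```python
from dataclasses import asdict, dataclass
from datetime import datetime


@dataclass(frozen=True)
class CallRecord:
    """Hypothetical metadata for one call: who, when, where, and how long."""
    caller: str
    callee: str
    start: datetime
    duration_s: int
    caller_cell: str  # assumed cell-tower ID standing in for the caller's location
    callee_cell: str  # assumed cell-tower ID standing in for the callee's location


@dataclass(frozen=True)
class Call:
    metadata: CallRecord  # what the system needs in order to route and bill the call
    content: str          # the conversation itself (the "data")


def metadata_only(call: Call) -> dict:
    """Return what a metadata-only collection would keep: every field except the content."""
    return asdict(call.metadata)


if __name__ == "__main__":
    call = Call(
        metadata=CallRecord(
            caller="+1-212-555-0100",
            callee="+1-212-555-0199",
            start=datetime(2017, 5, 1, 14, 30),
            duration_s=420,
            caller_cell="NYC-0412",
            callee_cell="NYC-0877",
        ),
        content="(the words actually spoken)",
    )
    print(metadata_only(call))
```

Dropping the content field still leaves who talked to whom, when, where, and for how long, which is the point the next paragraph makes.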

Metadata is surveillance data. In those early months of the Snowden documents, President Obama said, "Don't worry, it's only metadata." But let me give you a little thought experiment. Imagine I hired a detective to eavesdrop on you. That detective would put a bug in your home, in your office, in your car, and collect the data, the conversations. The National Security Agency (NSA) at that point said, "We're not collecting that." If that same detective put you under surveillance, he'd produce a different report—where you went, what you did, who you spoke to, what you purchased, what you looked at. That is surveillance; that's metadata.

Metadata is actually much more important than conversational data. It tracks relationships, it tracks associations, it shows what we're interested in, who is important to us. It fundamentally reveals who we are. Nobody ever lies to their search engine. It is also easier to store, to search, and to analyze.

Right now we are in the golden age of surveillance. We are under more surveillance than anybody else in human history, by a lot. And it has some interesting characteristics. It's incidental. It is a side effect of all of the things that we want to do. It is covert. You don't see the approximately 100 Internet trackers that are tracking what you are doing as you browse. If they were standing behind you, you'd say, "go away, I'm doing something private." But they're in your computer. They're hidden, they're covert.

You didn't see most of the cameras when you walked here. They're there. It's hard to opt out of. It is very hard not to be under this surveillance. I can't not use a credit card. I can choose not to use Gmail, to say, "I don't want Google having my e-mail, so I'm not going to use Gmail." And I don't. But last time I checked, Google has about two-thirds of my e-mail because you all do, and it is ubiquitous. It is happening to all of us everywhere because everything is computerized.

Ubiquitous surveillance is fundamentally different. We know what surveillance is like. We see it on television. It's "follow that car." But ubiquitous surveillance is "follow every car," and when you can follow every car you can do different things. You can, for example, do surveillance backward in time. If you have the data, you can follow that car last week, last month, last year, as far back as your database goes.

You can do things like hop searches and about searches. They were in the news recently. These first showed up in 2014, but there was a new change in NSA procedures about these. Hop searches are where I have you under surveillance, so I'm going to always listen to everyone you talk to, everyone they talk to, and everyone they talk to. I remember the phrase "three hops." It was really big in the United States in those early Snowden months. That's three hops. The goal is to find conspiracies. What you actually learn is everyone orders pizza sooner or later.

Or about searches. Don't search on the name, don't put the name under surveillance, look for people using this word, using this phrase, talking about this topic. And you can't do that unless you have everybody under surveillance. Find me someone who meets certain surveillance characteristics. I want to know everybody who has been at this location at this time, this location at that time, that location at that time. I have three location timestamps. I can find that.
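Both kinds of query can be sketched in a few lines. The following Python is an illustrative sketch only, assuming a simple in-memory contact graph and a set of location sightings; the function names, data layout, and time buckets are hypothetical and are not drawn from any actual NSA program. hop_search is a breadth-first search capped at a number of hops, and seen_at_all intersects the sets of identifiers observed at each location-and-time pair.

```python
from collections import deque


def hop_search(contacts: dict[str, set[str]], target: str, max_hops: int = 3) -> dict[str, int]:
    """Breadth-first search over a contact graph.

    Returns every identifier reachable from `target` within `max_hops`,
    mapped to the number of hops needed to reach it.
    """
    hops = {target: 0}
    queue = deque([target])
    while queue:
        person = queue.popleft()
        if hops[person] == max_hops:
            continue  # do not expand past the hop limit
        for other in contacts.get(person, set()):
            if other not in hops:
                hops[other] = hops[person] + 1
                queue.append(other)
    return hops


def seen_at_all(sightings: set[tuple[str, str, str]], wanted: list[tuple[str, str]]) -> set[str]:
    """Return the identifiers observed at every (location, time_slot) pair in `wanted`.

    `sightings` holds hypothetical (identifier, location, time_slot) tuples,
    such as might be derived from cell-tower logs bucketed by hour.
    """
    result = None
    for location, slot in wanted:
        present = {who for who, loc, t in sightings if loc == location and t == slot}
        result = present if result is None else result & present
    return result or set()


if __name__ == "__main__":
    contacts = {
        "you": {"a", "b"},
        "a": {"c"},
        "b": {"pizza_shop"},
        "pizza_shop": {"stranger"},
    }
    print(hop_search(contacts, "you"))
    sightings = {("p1", "L1", "09"), ("p1", "L2", "10"), ("p2", "L1", "09"), ("p3", "L2", "10")}
    print(seen_at_all(sightings, [("L1", "09"), ("L2", "10")]))
```

In the toy graph, a shared pizza shop two hops out pulls an unrelated stranger in at the third hop, and the location query needs sightings of everyone, not just of a named suspect, before it can return anything.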

A couple of really clever things that the NSA is doing came out of the Snowden documents. They have a program—I'm not making this up; it's so cool—that looks for cellphones that move toward each other, turn themselves off, and then turn themselves on again about an hour later, moving away from each other. They're looking for secret meetings. They look for phones that are used for a while and then turned off, and then find other phones that are turned on for the first time, geographically nearby, right after. If you watch The Wire, you know what they're looking for; they're looking for burner phones.

They have a program where they know the phone numbers of U.S. agents around the world and look for other phones that tend to be near them more often than chance would predict, looking for tails. The list of things you can figure out goes on.

This is mostly done by computers. These are inferences. I was listening to Albert talk about coming to New York and looking around with this delegation of people. It sounds like he's casing the joint, and that is what is going to show up in the data. It is computers doing this, and people see the results the computers spit out.

This data is largely being collected by corporations. All the data we're talking about, it's not government data; it's corporate data. Surveillance is the business model of the Internet. We build systems that spy on people in exchange for services. You all know that the reason Facebook is free is because you're not the customer, you're the product. You are the product Facebook sells to their customers.

This business model did not have to emerge. It really emerged for efficiency reasons. In the early days of search, of e-mail, of social networking, there wasn't any obvious way to charge. You couldn't really make micropayments. And people expected the Internet to be free, so advertising showed up as the model which made the Internet work.

Alongside that, in the United States certainly, you had a huge data broker industry that came out of mailing lists and mail order: companies that made their living slicing and dicing the population into small, targeted segments that you could send direct mail to. They had large databases of personal information. Those two combined into sites whose business is spying on us. That is Google, that is Facebook, it is Microsoft, and it is Uber. And they are the world's most valuable companies. Companies that have our data are more valuable than companies that make the stuff that we buy. Always remember, if it's not obvious that you're the customer, you're the product.

The drivers here, the reason we have this, is that it's free and convenient. That is what we want. To be sure, this data is collected for the purpose of psychological manipulation. We call it advertising. It is now very exact and very personalized. We are reading about how it affected both Brexit and our election. It is propaganda. It's personalized ads, personalized offers, personalized manipulation in ways that would be impossible otherwise, (1) because we wouldn't have the data, and (2) because we couldn't do the targeting. On the Internet you can target individually in a way you can't with television or billboards or radio.

So personal data is valued highly enough to make Google, Amazon, Facebook, and Uber among the world's most valuable companies. Tesla is more valuable than General Motors (GM) because it has data that GM doesn't. It doesn't matter that Tesla sells one-thousandth of the cars. Corporations know an amazing amount about us. This is a perfect surveillance device. This knows where I am at all times. It knows where I live, it knows where I work, it knows when I go to bed, when I wake up. We all have one, so it knows who I sleep with. And it has to. Otherwise, it won't work.

I used to say that Google knows more about me than my wife does. That is certainly true, but it doesn't go far enough. I think Google knows more about me than I do, because it remembers better.

Who knows that you are here? Your cellphone company certainly does. If you used Google Maps to get here, Google does. A bunch of smartphone apps collect your location data. So do others, if you took an Uber to get here, made a credit card purchase nearby, or used an ATM; security cameras, again; license plate-scanning companies. And they're making inferences.

We know a lot about this. Facebook knows you're gay even if you don't tell Facebook. There was a great article in The New York Times six years ago or so about Target Corporation, a big retailer here, that knew when someone was pregnant before her father did, because it is able to make these inferences. It is not always right, but for advertising you don't have to be right all the time; you have to be right most of the time.

Government surveillance has largely piggybacked on all these capabilities. It is not that the NSA woke up one morning and decided to spy on everybody. They woke up one morning and said, "Wow, corporate America is spying on everybody. Let's just get ourselves a copy." And that's what they do.

This allows governments (the United States, the United Kingdom, and everywhere else) to get away with a level of surveillance we would never allow otherwise. If the government said, "You must carry a tracking device at all times," you would rebel. Yet we put a cellphone in our pocket every morning without thinking. Or imagine if we had to alert the police whenever we made a new friend; you can imagine a regulation like that. You laugh, but you all alert Facebook. Or if you had to give the police a copy of your correspondence: you never would, but you give it to Google.

Just as corporate surveillance is based on free and convenient, government surveillance is largely based on fear—fear of criminals and terrorists in our countries, fear of dissidence and new ideas in other countries. Ubiquitous surveillance turned out to be a very, very useful form of social control.

There are differences here, and we're seeing them right now in the politics of surveillance for intelligence purposes versus surveillance for law enforcement purposes. Intelligence purposes are more about metadata and flows and trends and relationships; law enforcement tends to be more about "I need to know what's in this iPhone right now. I have a person here. He's a suspect. I want to convict him. I need his iPhone." You don't really see that in the intelligence community, which is more about "give me a whole bunch of data and I can figure some stuff out." But that difference is more in the noise.

Really we have a public-private surveillance partnership. Fundamentally both governments and corporations want this ubiquitous surveillance for their own reasons. A lot of data flows back and forth. And there are some breaks in this. You're seeing corporations fight government a little bit around the edges, very publicly because it's a good public relations (PR) move, but largely the interests are aligned. It is power against us.

Google wants you very much to have privacy from everyone except them. Just like the Federal Bureau of Investigation (FBI) will say that, "We want you to have privacy, just not from us." It doesn't actually work that way.

Let's talk about why this matters. I think in an audience like this it's obvious, but I want to enumerate some of the reasons. There are very profound implications for political liberty and justice. There are people being accused by data, and data being used as evidence. Again, it is no big deal if it's for advertising. I get shown an ad for a Chevy I don't want to buy. Put it in a different context and someone drops a drone on my house.

There is an enormous amount of self-censorship in communities that are affected by this kind of surveillance. This inhibits dissent; it inhibits social change. It is ripe for abuse. There are matters of commercial fairness and equality: we are seeing surveillance-based discrimination and surveillance-based manipulation. We are seeing our data exposed when the third parties that hold it suffer privacy breaches.

This matters for reasons of business competitiveness. Companies operating in countries with this kind of surveillance are being hurt in the market. We're seeing this between the United States and the European Union: the European Union has much more stringent privacy rules than the United States does, and that is affecting U.S. companies.

This affects our security. The infrastructure of surveillance hurts our security. There was an article today saying that the U.S. Senate is, for the first time, allowing Senate staffers to use Signal. This is Signal. This is the program they called "evil" because people were using it to protect their privacy. They finally realized, "Wait, we need to protect our privacy," because there are security implications to having all this surveillance data available to anybody: anybody who can buy it, anybody who can steal it.

This, of course, affects privacy. Fundamentally, deep down it affects how we present ourselves to the world. It affects our autonomy as human beings, and by extension our selves, our liberty, our society.

How do we fix this? I actually wrote a book on this. It is called Data and Goliath: The Hidden Battles to Collect Your Data and Control Your World. I spent a lot of time on how to fix this.

The first thing is to recognize that we need security and privacy. You often hear this discussed as a trade-off, security versus privacy, as if those under constant surveillance might feel more secure because of it. But I think we need both. Privacy is a part of security, security is a part of privacy.

More important, we need to prioritize security over surveillance, like the U.S. Senate just did. We live in an infrastructure which is highly computerized. Either we can build it for surveillance, which allows the FBI and the Russians to get at our data, or we can build it for security and allow neither.

Another principle is transparency. There is a lot of secrecy in this world. One of the lessons I think we've learned post-Snowden is that secret laws have failed, that secrecy means there is no robust debate in our society about this either on the government side or on the corporate side.

We need more corporate transparency as well. If you're interested, just look at the really impressive series of investigative reports The New York Times published on Uber and the things it is doing with our data. It is pretty scary, and it is in line with some of those NSA programs I talked about. Using the Uber data they had, they were able to identify regulators, because regulators would tend to hail Ubers near government buildings, and they would show them a different, more legal version of Uber that we normal customers wouldn't see. They actually had a name for the program that was surprisingly benign.

So transparency, but that's not enough. You need oversight and accountability and some methods to deal with these issues, because an important principle, which I call "one world, one network, one answer," is that we just don't know how to build a world where some people can spy and some people can't.

The FBI very much wants into your iPhone. Not all the time, just in case they think you're doing something. But they'll say, "We don't want a backdoor, we just want an ability to get in that only we can use and someone else can't." But I don't know how to do that. I can't build an access mechanism into this device that only operates when there is a legal warrant sitting next to it. I can't make a technical capability function differently in the presence of a legal document; either I make this secure or I make this not secure. Then I have to build some kind of social system to try, to hope, that only the FBI uses it and the Russians or the criminals don't. I don't know how to do that. So it is either security for everyone or security for no one.

I assume people are paying attention to the big ransomware attacks of the past week or so. The latest rumor is that North Korea is behind it. I don't know if you heard that. That is not my guess. My guess was, and still remains, cybercriminals, but it could be North Korea. It's true. We actually don't know, and this is an amazing thing, whether this attack that is affecting hundreds of thousands of computers around the world is the work of a nation-state with a $20 billion military budget or a couple of guys in a basement somewhere. I truly don't know, and it could equally be either. That is the world of this democratization of tactics.

The vulnerability behind it is one the NSA knew about at least five years ago. They kept it secret instead of telling Microsoft so Microsoft could fix it, and used it to spy on people, but they don't get to be the only ones. It turns out someone stole that vulnerability from them in, we think, 2015 (we're not exactly sure) and published it in March. Sometime after it was stolen and before March, the NSA realized it had been stolen; they told Microsoft about it, I think, in January, and Microsoft fixed it in February. But a lot of you didn't install your patches, and that is bad.

But this is complicated. The NSA can't keep that vulnerability just to themselves. They can keep it secret so anybody who knows about it can use it, or they can make it public so that everybody can protect themselves.

One more quick story. Anybody heard the term "stingray"? Stingray is an International Mobile Subscriber Identity-catcher (IMSI-catcher). Basically it's a fake cellphone tower. The FBI uses these to figure out who is in an area. They will take one of these stingrays, they'll set it up, and because cellphones are dumb and promiscuous, they will connect to anybody that claims they're a cellphone tower. That's the way the system was built, very insecure. They'll put up a stingray, all your phones will connect to it, and now they know everybody who is here.

This was really secret technology when it was developed. The FBI would—I am not making this up—drop court cases rather than let evidence about stingrays appear in public proceedings. When there was a Freedom of Information Act (FOIA) request in Florida to get some stingray data, federal marshals swooped in and took the data before it could be given away.

It turns out, though, this actually isn't very secret. About two years ago, a web magazine—I think it was VICE—went around Washington, DC, looking for stingrays and found dozens of them operated by who-knows-who around government buildings, embassies. You can now, if you want, go on Alibaba.com and buy yourself a stingray IMSI-catcher for about $1,000. Everyone gets to use them, or nobody gets to use them.

Solutions here are very complicated. They are political, legal, technical. We always knew that technology can subvert law, but we also learned that law can subvert technology, and we need both working together.

We don't have time for concrete proposals—there are a bunch in my book—but really social change needs to happen first. None of this will change until (1) we get over fear, and (2) we value privacy.

This is hard. There are a lot of studies showing that we say we value privacy greatly, but not when it comes down to the point of purchase. We would gladly give up our privacy for one-ten-thousandth of a free trip to Hawaii, or the Canary Islands for you other guys. This has to be a political issue. It was not in the last U.S. election. I doubt it is in the current British election, though I haven't been paying that much attention.

But there is a real fundamental quandary here, and that is: How do we design systems that benefit society as a whole while at the same time protecting people individually? I actually think this is the fundamental issue of the information age. Our data together has enormous value to us collectively; our data apart has enormous value to us individually. Is data in the group interest or is data in the individual interest? It is the social benefit of big data versus the individual risks of personal data.

This problem shows up again and again, as with movement data. I landed at LaGuardia this morning. Traffic was miserable. My taxi driver used a program called Waze. If you know Waze, it is a navigation program that gives you real-time traffic information. How do they get that data? Everybody who uses Waze is under surveillance, and they know how fast you're going. Traffic was miserable, but that program got me here, and got me here earlier. It was very valuable, at the expense of being under surveillance.
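How a service like this turns surveillance into traffic data can be sketched roughly: take consecutive location pings from the same device on the same road segment, convert each pair into a speed, and take the median per segment. The record layout (device, segment, seconds, metres along the segment) is a hypothetical simplification and not Waze's actual pipeline; real systems must also match raw GPS fixes to roads.

```python
from collections import defaultdict
from statistics import median


def segment_speeds(pings):
    """Median speed in km/h per road segment, from consecutive pings of the same device.

    Each ping is a hypothetical tuple (device_id, segment_id, time_s, position_m),
    where position_m is the distance travelled along the segment.
    """
    by_device = defaultdict(list)
    for device, segment, t_s, pos_m in pings:
        by_device[device].append((t_s, segment, pos_m))
    samples = defaultdict(list)
    for track in by_device.values():
        track.sort()  # order each device's pings by time
        for (t0, seg0, p0), (t1, seg1, p1) in zip(track, track[1:]):
            if seg0 == seg1 and t1 > t0:
                samples[seg0].append(abs(p1 - p0) / (t1 - t0) * 3.6)  # m/s -> km/h
    return {seg: median(v) for seg, v in samples.items()}


# Invented pings from four cars on two segments.
pings = [
    ("car1", "segment_a", 0, 0), ("car1", "segment_a", 60, 250),
    ("car2", "segment_a", 0, 100), ("car2", "segment_a", 30, 200),
    ("car3", "segment_a", 10, 0), ("car3", "segment_a", 70, 500),
    ("car4", "segment_b", 0, 0), ("car4", "segment_b", 60, 1000),
]
print(segment_speeds(pings))
```

The calculation only works because each ping can be linked to the same device's previous ping, which is exactly the per-person tracking described above.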

Advertising. I actually like it when I go on Amazon and get suggestions of books I might want to read based on books I've read. That is valuable to me. And, of course, it's valuable to Amazon, at the expense of a company having the list of books that I've purchased.

This is the bargain that the NSA, Government Communications Headquarters (GCHQ), the FBI, all these organizations, offer us: "We want your surveillance data, and we'll protect you from terrorism, from crime."

Lastly, medical data. I think there is enormous value in all of us—in the country, in the world—taking our medical data and putting it in one big database and letting researchers at it. I think there are amazing discoveries to be learned from that kind of data set. On the other hand, yikes! That is really personal. How do we make that work—data in the group interest versus data in the individual interest?

I started by saying that data is a byproduct of the information age. I'll go one step further: I think data is the pollution problem of the information age. Kind of interesting saying that here at a center built by Carnegie. If you think about it, all processes produce it. It stays around. It is festering.

How we deal with it, how we reuse it, recycle it, who has access to it, how we dispose of it, what laws regulate it: that is central to how the information age functions. I truly believe that just as we here today look back at those early decades of the industrial age and marvel at how the titans of that age could ignore pollution in their rush to build the industrial age, our grandchildren will look back at us here today in the early decades of the information age, and they are going to judge us on how we dealt with data and the problems resulting from it.

Thank you.

ALBERT TUCKER: Thank you so much, Bruce.

My takeaway from that is just your last phrase, "Data is the pollution of the digital age." I'll have to think about that one a little to get my head around that.

So if I can just ask: I think one of the things for us, for this study trip, is also how do we in our work with libraries and the role of the libraries think about the issues you've raised for us? Thank you very much.

Can I ask Deborah Caldwell-Stone, who is deputy director of the Office for Intellectual Freedom in the American Library Association (ALA), to give us something of a response to that?

DEBORAH CALDWELL-STONE: Good afternoon, everyone. Thank you for the invitation to be with you here today.

I want to look at this from the perspective of American libraries, which have a deep tradition of defending individual privacy when they provide services to library users. What I would like to say is that if surveillance is the business model of the Internet, then public libraries are well placed to function as an intermediary and a defense against surveillance, at least at the individual level. Their role is as an educator, as an institution for empowerment for the individual who wants to make choices about what happens to their data and what kind of surveillance they are subject to.

Consider: 100 percent of the public libraries in the United States offer access to the Internet and provide their communities with essential links to e-government, employment, educational services, and resources. Adding to this, librarians are technology leaders here in the United States. We are a profession that used digital technology for circulation systems, online catalogs, and information databases long before the rise of the Internet and applied privacy principles in the use of that technology. Some of the most knowledgeable experts in the secure use of digital resources and networked and online technologies are librarians.

Consider that protecting patron privacy has long been at the center of the mission of the American Library Association and the library profession. As early as 1939, librarians affirmed a right to privacy for library users, asserting that privacy was a necessary condition for the free and full exercise of the right to read and receive ideas. Librarians understand that a lack of privacy in one's reading and intellectual activities inevitably chills inquiry, damaging both individual rights and the democracy that supports those rights. So they have continued to zealously adhere to the professional commitment to protect user privacy.

Librarians have protected these rights in small, quiet ways in their day-to-day work: for example, shredding computer sign-up sheets at the end of the day, enabling anonymous logins to informational databases, or even setting up what they call "warrant canaries," signs in the windows saying, "The FBI hasn't been here today," to let people know that they can come into the library knowing that no one is asking for records.

They also have worked to protect privacy in more public ways, by opposing warrantless surveillance under the Patriot Act or going to court to challenge government demands for library records. When the pervasive use of digital content, social media, and search engines expanded the collection and use of patrons' personal data, librarians similarly shifted their attention to the privacy challenges posed by commercial online services.

Witness ALA's public protest of Adobe Digital Editions' practice of collecting and transmitting large amounts of unencrypted data about the readers who used its e-book platforms. ALA went out, we marshalled support among the members of the association, we filed a protest, we went public, and ALA soon persuaded Adobe to encrypt the data transmissions to protect the privacy of those readers.

Consider too that libraries are trusted community institutions that offer confidential information services in a non-commercial atmosphere—a real rarity today. The Pew Research Center, which has conducted nearly a decade's worth of surveys of library users, today summarizes the results of its research by simply stating that people love their libraries and trust their local library. They see the library as a safe space for unobserved and private information seeking. There is an issue with that belief, but we'll set that aside for now.

According to the most recent Pew surveys, a majority of Americans look to their local library for information about technology, with over half of those surveyed agreeing that libraries contribute a lot to their communities by providing a trusted place for people to learn about new technology. More important, a majority of those—80 percent—say that libraries should definitely offer programs to teach people, including kids and senior citizens, how to use digital tools like computers and smartphones and how to secure the privacy of their data.

In fact, libraries are already deeply involved in providing their communities with instruction in digital literacy. In the most recent public libraries and Internet survey conducted by the Institute of Museum and Library Services (IMLS) here in the United States, over 80 percent of libraries report that they offer classes on general Internet use, general computer skills, and point-of-use technology training to their patrons.

Again, if surveillance is the business model of the Internet, public libraries are well placed—they are in position—to serve as an intermediary and a defense against surveillance. To achieve this, libraries are doing many things, but the real focus is on education, developing classes and learning opportunities that assist patrons in making good decisions about protecting their privacy.

A great example of this here in the United States is the San Jose Public Library's Virtual Privacy Lab. I don't know how many of you have heard of it, but in that case the San Jose librarians and local privacy experts from Berkeley created an online interactive game for patrons to use to become privacy-literate.

Users can generate a custom privacy toolkit when they visit the library's website, a toolkit geared toward their unique privacy needs, because the library has accepted the fact that everyone's privacy needs are different. Some people want to be more social and open; others want to lock down their data. The toolkits are customized to serve people at either end of that spectrum. To broaden its outreach, the library has even had the website professionally translated into Vietnamese and Spanish, the languages of the two primary immigrant populations in that library's service area.

Other libraries are installing privacy self-defense tools on their public computers and offering classes to the community on how to use these secure technologies to take more control over their personal information and online identity. Some examples of these tools are Privacy Badger, which lets the user see and block ad and social media trackers as far as possible, and HTTPS Everywhere, which switches a person's web browsing to encrypted HTTPS connections wherever a site supports them. There is also DuckDuckGo, a no-track search engine, and the Tor Browser, which, as you probably know, enables secure anonymous web browsing.

Using these tools libraries are giving control back to the user. They are trying to serve their patrons in the best way they know how and offer them the choice to try to evade surveillance as best they can. It is not perfect, but it does do the job of making those tools available to them and, more important, making them available on the library's own computers. It does no good to teach somebody how to use the Tor Browser and not put the Tor Browser on the library's computer.

Libraries and librarians are also developing initiatives to foster greater awareness, among librarians themselves, of current privacy concerns and of tactics to defend privacy. It is an interesting fact that when we went out to look at what libraries were doing around privacy, we found a real gap in knowledge about what the privacy concerns were and what tools individual staff, line librarians, had available to them.

In regard to this, the ALA runs an initiative that you may have heard of called Choose Privacy Week. To be blunt, it is very much modeled on our Banned Books Week, only in regard to privacy. We want to raise awareness of privacy issues, we want to engage the public in a conversation about this, we want to involve the entire library profession in the United States in a dialogue about what we can do to best defend privacy in the library and in the wider world.

We started Choose Privacy Week as a flat-out campaign against government surveillance and monitoring. It was spurred on by the Patriot Act, of course, and the discovery of other government tracking and surveillance programs. As we went along with Choose Privacy Week, we found that librarians themselves needed to be equipped with knowledge and tools to help their patrons defend their privacy and to institute better privacy practices in the libraries themselves. Choose Privacy Week has now evolved, and now it is not just focused on government surveillance, but it is also involved in equipping librarians, educating librarians, with the tools they need to better serve their communities with privacy protections.

There are two initiatives here in the United States right now doing similar work, equipping librarians to better defend patron privacy. One of them is the Library Freedom Project, run by a librarian, Alison Macrina, which does privacy trainings very much focused on raising awareness of government surveillance and corporate surveillance, and of the tools, for example the Tor Browser, that libraries can use to enhance privacy. The trainings are intended for librarians, who are then expected to take their training and train their individual patrons on these issues.

Another initiative—I don't know if you may have talked to some of these folks here in New York—is the Data Privacy Project at Brooklyn Public Library. They are doing great work in training New York librarians about data flows, privacy threats, and how they can be addressed so that those librarians and library workers who meet library users face to face on a daily basis are better equipped to serve the information needs of persons asking what they can do to protect their privacy.

I have to say that everything I've talked about so far is what I call "above-the-line" services. These are direct patron services and direct patron engagement. But there are "below-the-line" services that every library can start tackling in order to preserve patron privacy; in fact, they are a necessary part of preserving patron privacy. One of them is to audit the library's own data collection and use, and to develop policies and procedures that are privacy protective. I see from the tweet stream that you visited the New York Public Library. They are a prime example of an institution that has taken on this process.

Bill Marden has done an enormously wonderful job of tracking what data flows are going in and out of the library and what policies and procedures he needs to put into place to protect the privacy of the individual patron. This includes dealing with third-party vendors, who provide services and digital content to the library, and developing things like model contracts that incorporate things like privacy standards, and recognize the New York law that protects the privacy of patron data in the contract itself so that the vendor is bound to protect the data, not sell it to third parties, and not treat it in ways that they would treat commercial data at all.

The other thing that libraries can do, and aren't doing here in the United States, is encrypt their own web presence and their own stored data. ALA has embarked on its own project in regard to this. We have partnered with an organization called Let's Encrypt, a certificate authority that issues the security certificates needed for encryption for free. There is no charge for this. They have also automated the process of installing the certificates on the servers of the institutions seeking encryption. We are hoping that by making certificates free and easy to install, more and more libraries, even the smallest rural libraries, will be able to encrypt their web presence and better secure their users' library data.

Another part of what libraries do is advocacy. I've talked a little bit about this, but one of the major roles for ALA, one of the major parts of my job, is to advocate for privacy-protective law, to try to make those changes in society that are necessary to protect user privacy.

One area where there has been some success is student data privacy laws. As you are aware, learning analytics are a big thing today: big data in education. We have found that entities like Google and other learning analytics companies are collecting the smallest details about K-through-12 students, storing them forever, and making them available for analysis. There need to be some protections for this.

There has been a growing drumbeat from librarians and educators for laws to protect this very sensitive data, and even to try to forestall some of the collection that is going on. We have been at the forefront of going to state legislators and asking for student data protection. California has the model law, the Student Online Personal Information Protection Act (SOPIPA), and we continue to pursue such laws. In 48 states in the United States there is actually a law that defends patron privacy, and in Missouri we persuaded the state legislators to amend theirs, what we called the "Library Privacy Confidentiality Act," to impose a duty to protect patron data on the third-party vendors themselves, so that there is a legal duty not to sell or disclose patron data.

We also, as you know, very obviously, have advocated for the reform or repeal of laws that allow the warrantless collection of user data, and we advocate against pervasive, nontargeted government surveillance such as Bruce talked about.

But the other work that we have been involved with is the promulgation of national standards, which is something that the United Kingdom may be interested in. Working under the sponsorship of the National Information Standards Organization (NISO), public school and academic librarians met with digital content publishers, library systems vendors, and academics. In other words, we sat down with the enemy, and we hashed out what was needed to protect patron privacy while allowing the vendors the data they needed to operate the digital content systems, the integrated library systems that libraries rely on to provide both content and service to their users.

These standards, published in 2015 as the NISO Consensus Principles on Users' Digital Privacy in Library, Publisher, and Software-Provider Systems (yes, it was a consensus process), are the established standards for policy and practice intended to ensure patron privacy while allowing the provision of digital content and network services. They strike the often-discussed balance between preserving privacy and allowing enough data to flow to provide those services.

This is just a small example of what we've been pursuing here in the United States to protect patron privacy as best we can. We are not able to solve the larger issues that Bruce talked about. We do participate in the advocacy to try to solve them, but where we are located is in our communities, on the ground, working one on one with individual patrons in the trenches, trying to help individual users make the choices they need to make about preserving their privacy.

Thank you.

Questions

QUESTION: One question for Bruce, I think, and for whoever was throwing CryptoParties over there. My name is Nate Hill.

I run an organization called the Metropolitan New York Library Council, and we are just beginning a new program where we're designing a data privacy curriculum that we are going to roll out across the three public library systems in New York City.

One of the things that I am really thinking hard about with this is how to change the culture around privacy and security. It is very hard to talk about it for too long in the way that we've been talking about it without somebody saying something about a tinfoil hat or something like that. I'm wondering if anybody has any thoughts about how we can make this kind of thing more accessible, something that people readily want to join in on.

I'll give another example. The reason I mention CryptoParties is that a while back, when I was working at a public library, we did something like that with a DEF CON group, and the first thing that happened is a bunch of people came in with their lock picks and tried to pick the lock on the server box in the library. So it was not the kind of welcoming event that a lot of people wanted to come and join in on.

I am really interested in the culture around these issues. I think the Data Privacy Project and the Library Freedom Project are great, but I don't know that they have succeeded in making this a really widely inclusive effort. Do you guys have any thoughts on that?

BRUCE SCHNEIER: I talked a bit about that. This is actually a hard topic. It is hard to make people care about privacy because, like many fundamental rights, you don't notice it until it's gone. Like many abstract rights, it is easy to bargain away for a small benefit. If you offered a Big Mac for a DNA sample, you would have lines around the block because "A Big Mac is tasty, right? What are you talking about?" So it is a near-term gain versus a potential long-term loss. I think we need to really reframe privacy as personal autonomy. Privacy is not about something to hide; privacy is about controlling how I present myself to the world. When I am under ubiquitous surveillance, I lose control over how I present myself to the world, and I become less than a fully autonomous human being.

I think those kinds of conversations make privacy less about tinfoil hats and "the government is out to get me," and more about "this is how I navigate a complex world while retaining myself." But this is hard. It is hard for all of us. You're dealing with abstract losses.

It is the same problem we have with getting people to install patches against these computer vulnerabilities—or to floss. The immediate cost is tangible, the long-term benefit is abstract, and people are terrible at making those sorts of decisions. We don't buy life insurance.

DEBORAH CALDWELL-STONE: I would recommend getting in touch with the folks at San Jose Public Library.

QUESTIONER [Mr. Hill]: I know them. Yes, yes, it's great work.

DEBORAH CALDWELL-STONE: That was their starting point: to say that this is a matter of autonomy and choice, and that everyone is at a different place with privacy, with what they're willing to trade and what they want to keep private. I really respect the work they're doing, and we at ALA are trying to push this idea out to the larger profession, but it is slow going. Part of it is generational. To be blunt about it, I think we are going to have to see a generation of librarians retire.

Beyond that, I think what we really have to do is just do it. It is an incremental process of education, and we need to have leaders in place who are determined to push this agenda forward. Those leaders are in place at ALA, at least for the time being, and I know that the member leaders on ALA's privacy committee are devoted to working on this issue.

QUESTION: We hosted two CryptoParties at my local library in Northeast England last year. And yes, when a council member came to do a video to help us promote the event, he did ask, "Are people going to wear masks?" And I said, "No, they are not going to be wearing masks. It is just normal people coming to learn about something, and first of all, it is really part of your basic digital skills. You know how to use a mouse and a keyboard. You know how to use browsers. You should know how to protect your privacy and know about online security." Actually, though, our CryptoParties only attracted people who were already aware of those issues.

Our next step is going to be targeting the people who really need to know because they are not aware of those issues. My next step this year is going to be working with what we call our Silver Service group, which is our older citizens' group who are learning digital skills, so I am definitely going to do a privacy session for them.

We have colleagues also doing that around digital inclusion in less-wealthy areas of the city, so again, it is building both privacy tools and privacy skills within our existing digital skills programs.

ALBERT TUCKER: And also, I think you talked about behavioral change, and you mentioned it as well. I think there is something about culture-change approaches that we should also think about: How do we make this issue popular in terms of language, semantics, and approach? I think some different approaches are being highlighted here.

QUESTION: Hi. I'm Ron Berenbeim. Just a quick comment and then a question.

Privacy is a matter of expectation, and this talk has been something of an eye-opener for me as to the extent to which my privacy may be getting invaded in ways I didn't know about. An example of what I mean by expectation is the article Joel cited, the famous article by Brandeis and Warren, which gave the first legal formulation of privacy: the right to be let alone.

There is an interesting backstory to that. Warren was a very famous Harvard Law School professor, and he became enraged when pictures of his daughter's wedding at a local country club appeared in the newspaper. So he got his best pupil, a guy from Kentucky named Louis Brandeis, and they defined the right of privacy legally for the first time, and it became a legal expectation. For most of human history nobody had privacy; it is a very middle-class notion. The lower classes didn't have privacy because they all lived in one room. The upper classes didn't have privacy because they had eight or nine servants in every room they went to.

The management of expectations is a key role for people, libraries, or any institutions who want to do something about that. And I have to tell you that even at my tender age, expectations seem to have changed very, very dramatically throughout my life.

My question is this: I did some work on privacy about 20 years ago, when it concerned whether or not somebody was reporting to human resources about things that maybe shouldn't be going on around the office. What I learned, and what everybody knows, is that there was a very real difference between Europeans and Americans in their expectations of privacy.

So now we have a lot of information coming down the wire about the European Union, about Britain's likely exit from it, and about other countries that may want to get out. And the European Union has, as we all know, some people in Brussels who define what a banana should look like and everything else, and it certainly has a lot of regulations about privacy. So in negotiations to leave or remain in the European Union, is privacy going to be an issue at all?

WILLIAM PERRIN: I'm William Perrin from the United Kingdom. I'm doing a piece of work for Carnegie UK Trust on Brexit, as it's known, and the digital sphere.

The issue of privacy and data rights in Britain is actually at quite an extraordinary juncture. The country has voted to leave the European Union. It is about to elect, I can probably say this, a fairly rampantly Brexitist government in the current general election, and yet the government has committed to bringing in the new EU privacy and data protection legislation during the Brexit process. It actually put a bill before Parliament to implement the very latest EU privacy and data protection directives, because the corporate interests are so colossal.

They have been very clear, and it is a very pragmatic decision by the British government, that if Britain is to remain a very successful center for technology startups and very large technology businesses, it has to have uniformity with the EU companies with which it trades data.

In that sense, no, it won't come up, because they've said they are going to adopt the new EU regime. There is a brand-new EU regime, the General Data Protection Regulation (GDPR), that comes into force in 2018. It is reasonably different to the current regime. But in the eyes of many commentators, it is not up to speed with the latest developments in machine learning and very large-scale processing; it can't be, because it has been many years in development. Someone characterized it beautifully the other day: "We're moving from a position where the basis for a decision on manipulating a piece of data used to be a human decision. It will soon become a machine-based decision."

That is not necessarily a bad thing, but it produces some enormous and quite profound consequences that we don't yet understand, let alone know how to regulate for.

ALBERT TUCKER: I think that is the point Bruce is making about where this could go. In classic British tradition, just because it is not going to come up doesn't mean it is not significant.

QUESTION: Lisa Rosenblum. I am the director and chief librarian of Brooklyn Public Library. Thanks for the shout-out. And thank you for the cookie tip. I moved here almost two years ago, and I didn't know that about the black-and-white. I appreciate that.

This is more of a comment, and I'm not sure you can answer it, but just an observation. Having been in the public library business for more years than I'd like to talk about (and I'm not going to retire), what I've noticed, as Nate said, is that with privacy, sometimes I think I care about it more than other people do.

But the second thing is that public libraries are sitting on a lot of really great data, and people want that data. Internally people want that data because the reality is that public libraries really have to push their relevancy more now than ever in my career. I'm lucky; I live in a great city and it has a lot of support. But there are public libraries across the country that don't have that support and are trying to get money to survive, to fix branches, to buy collections. It is really tempting, with all the data we have, to use it for marketing purposes, to be cool, to be like Amazon and Facebook or other high-tech companies. I just want to give everybody a reality check. This is the world we live in, and I think we are sort of at a crossroads.

I like the privacy work we're doing, but the reality is that, to me, this is the more significant issue I'm facing in the public library. Do you want to comment?

BRUCE SCHNEIER: I think this is an important consideration. It's not that the companies we're talking about are immoral; it's that the legal business model allows surveillance. If the head of Google stood up and said, "We're no longer going to spy on our users," he'd be fired and replaced with a less moral CEO, because doing what is legal is what we expect from corporations.

So if we're going to change this, it has to come through law, which means you're not going to get corporate surveillance dealt with until you deal with government surveillance. And you're not going to get government surveillance dealt with until you deal with fear and the idea that if we spy on everybody we can find the bad guys.

I also want to point out that the Brooklyn Public Library is a great library. The main branch is a fantastic building, and I spent way too much of my childhood there. I do recommend going to Grand Army Plaza and seeing it.

ALBERT TUCKER: So you say marketing does not have to be based on data. Deborah?

DEBORAH CALDWELL-STONE: This is where I said, "I'm not going to talk about that now, but we'll get to it later," and we've gotten to it. For me, that's the greatest issue I am confronting in working with libraries on privacy. You're concerned about it, but I know libraries that are out there using their data for marketing, sometimes with third parties outside the library. We're struggling with this issue.

The privacy subcommittee has pretty well concluded that this is not right, so we're encouraging projects that address it. Becky Yoose at Seattle Public Library is deeply involved in a project on what she calls "data de-identification," so that the library will be able to strip as much personally identifiable information as possible out of the data while still leaving it useful for marketing. It is an initiative she has been leading for a year and a half now.